AI Search Volatility: Why AI search results keep changing

AI search engines like ChatGPT and Google AI Overviews rarely give you the same answer twice. That's because they're built to be probabilistic rather than deterministic. When you ask a question, the underlying models predict what comes next one token at a time, and they sample from those predictions with controlled randomness to keep responses from becoming repetitive or predictable.
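To make "controlled randomness" concrete, here is a minimal sketch of temperature sampling, one common way language models introduce it. The candidate words, scores, and function names are made up for illustration; this is not how any specific platform is implemented.

```python
import math
import random

def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]

def sample_next(candidates, scores, temperature, rng):
    """Pick one candidate at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical next-word candidates and model scores (assumptions for illustration).
candidates = ["reliable", "popular", "recent"]
scores = [2.0, 1.5, 0.5]

rng = random.Random(0)
picks = [sample_next(candidates, scores, temperature=1.0, rng=rng) for _ in range(10)]
print(picks)  # a mix of candidates; the exact sequence depends on the seed
```

At a temperature near zero the top-scoring candidate wins almost every time, while higher temperatures spread the picks across more candidates. That spread is the reason the same question can produce different answers.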

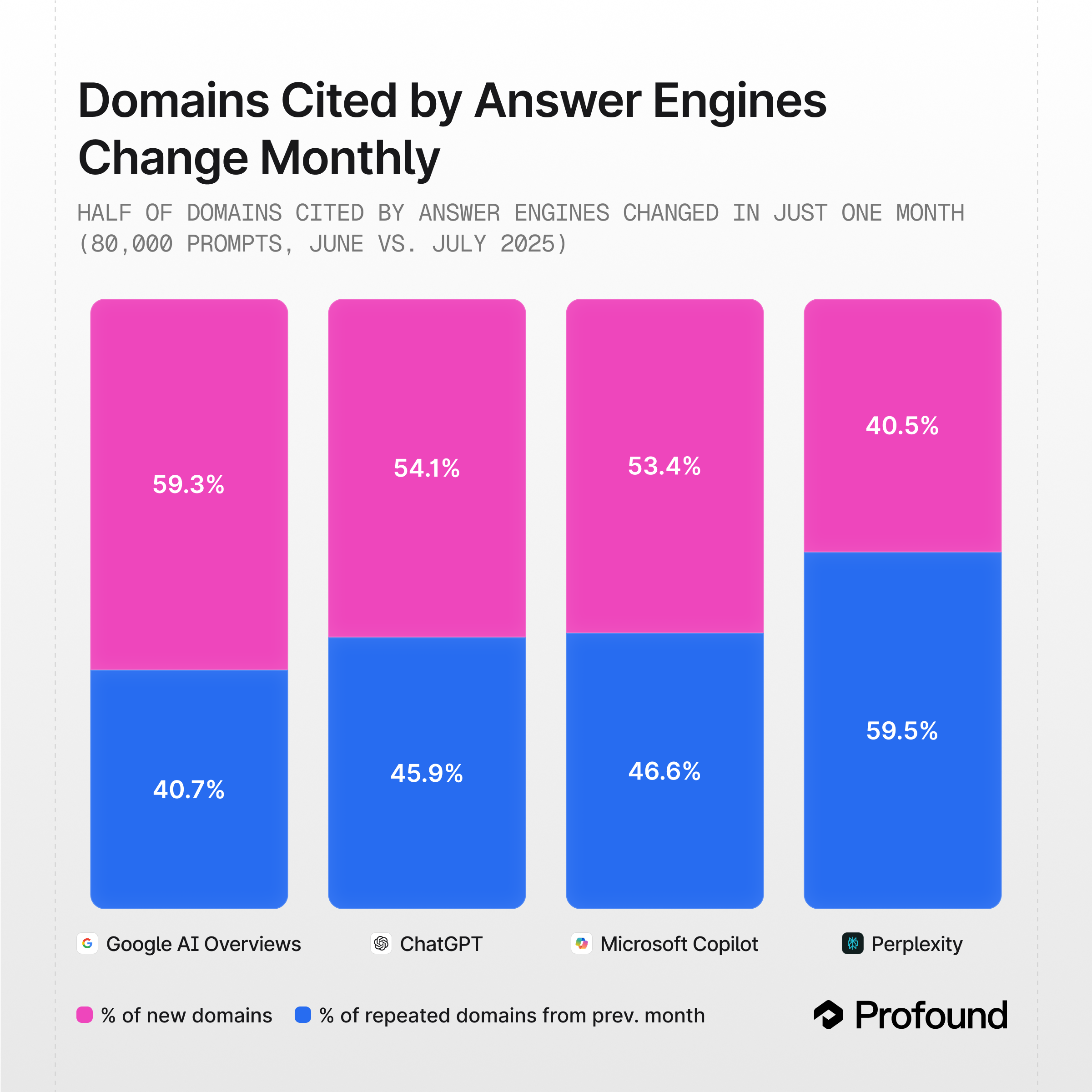

But here's what catches most people off guard: the sources these AI tools cite change dramatically over time, even for identical questions. Our recent analysis of citation patterns across major platforms reveals a fundamental challenge for anyone trying to understand their AI visibility.

Study methodology: Measuring citation consistency over time

We compared citation patterns from two three-day windows one month apart. Here's how we did it:

- Time Frame: June 11-13 ↔ July 11-13, 2025

- Query Type: Open-ended prompts designed to elicit source citations

- Sample Size: ~80,000 prompts tested per platform

- Measurement: Domain-level citation drift analysis

For each prompt that appeared in both windows, we measured "citation drift" as the percentage of domains cited in the July responses that weren't cited in the June responses. Averaged across prompts, this metric shows how much citation behavior shifts for the same prompts over time.
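The drift calculation can be sketched in a few lines. This is an illustration of the definition as we read it, not the pipeline used in the study: the function names and sample data are hypothetical, and using the later window's domains as the denominator is an assumption.

```python
def citation_drift(earlier_domains, later_domains):
    """Fraction of later-window domains that were absent from the earlier window."""
    later = set(later_domains)
    if not later:
        return None  # no citations in the later window, so drift is undefined
    new_domains = later - set(earlier_domains)
    return len(new_domains) / len(later)

def mean_drift(earlier_by_prompt, later_by_prompt):
    """Average drift over the prompts that appear in both windows."""
    shared = earlier_by_prompt.keys() & later_by_prompt.keys()
    scores = [citation_drift(earlier_by_prompt[p], later_by_prompt[p]) for p in shared]
    scores = [s for s in scores if s is not None]
    return sum(scores) / len(scores) if scores else None

# Hypothetical data: prompt -> domains cited in that window.
june = {
    "best crm": ["a.com", "b.com", "c.com"],
    "top vpn": ["x.com", "y.com"],
    "june only": ["q.com"],  # ignored: not present in July
}
july = {
    "best crm": ["a.com", "d.com", "e.com", "f.com"],
    "top vpn": ["x.com", "z.com"],
}

print(citation_drift(june["best crm"], july["best crm"]))  # 0.75
print(mean_drift(june, july))  # 0.625
```

In the example, three of the four July domains for "best crm" are new (75% drift) and one of two is new for "top vpn" (50%), which averages to 62.5%.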

Results: Citation drift across major platforms

Depending on the platform, roughly 40-60% of the domains cited in AI responses are different just one month later, even for identical questions. Each platform shuffles its sources in its own way, which suggests very different algorithms under the hood.

The volatility becomes even more pronounced over longer time periods. Drift rises to 70-90% when comparing January citation domains to July citation domains. It keeps growing as the gap between measurements widens, which makes any sporadic monitoring approach essentially meaningless.

What this means for AI visibility strategy

This level of citation volatility completely changes how we need to think about AI answer engine optimization. Unlike traditional search, where rankings provide relatively stable benchmarks (Google typically rolls out four to five core algorithm updates per year), AI citation patterns are inherently unstable.

The strategic challenges

These challenges don't mean AI visibility is impossible to track. They just require a fundamentally different approach than traditional SEO monitoring. The key is building systems that account for the probabilistic nature of AI search rather than fighting against it.
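One way to work with that probabilistic nature is to treat visibility as a rate measured over many runs rather than a single yes/no check. The sketch below is an assumption-laden illustration, not a description of any particular product: it takes hypothetical repeated runs of one prompt and estimates how often a domain is cited, with a Wilson score interval to show how uncertain that estimate is.

```python
import math

def appearance_count(runs, domain):
    """Return (hits, total): how many runs cited `domain`, out of how many runs."""
    hits = sum(1 for cited in runs if domain in cited)
    return hits, len(runs)

def wilson_interval(hits, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95% coverage)."""
    if total == 0:
        raise ValueError("need at least one run")
    p = hits / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    margin = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return max(0.0, center - margin), min(1.0, center + margin)

# Hypothetical results: the set of domains cited in each of 10 runs of one prompt.
runs = [
    {"a.com", "b.com"}, {"b.com", "c.com"}, {"a.com", "d.com"}, {"c.com"},
    {"a.com"}, {"b.com", "d.com"}, {"c.com", "d.com"}, {"a.com", "c.com"},
    {"b.com"}, {"d.com"},
]

hits, total = appearance_count(runs, "a.com")
low, high = wilson_interval(hits, total)
print(f"a.com cited in {hits}/{total} runs; plausible rate {low:.0%} to {high:.0%}")
```

With only ten runs the interval is wide, which is the point: a single spot check, or a handful of them, says very little about how often a domain actually appears.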

Essential monitoring principles

The good news is that once you understand these principles, you can build monitoring systems that actually work with AI volatility instead of against it. You start to see patterns emerge from the noise, and your AI visibility strategy becomes much more predictable.

For deeper background on how platform citation patterns differ, see our Citation Overlap Strategy research and the companion AI Platform Citation Patterns study.

The bottom line

Citation drift isn't a bug in AI systems. It's an inherent feature of how these probabilistic models operate. The variability keeps responses from becoming repetitive, pulls in different perspectives, and lets the systems adapt to changing information landscapes.

The question isn't whether your content will appear consistently in AI responses. It's whether you'll have the systematic monitoring and analysis capabilities to understand when, why, and how often it appears, and how that performance evolves over time.
