For the past year, a popular theory has circulated in some SEO and GEO communities: if you want your content to show up more in ChatGPT, Perplexity, and other AI tools, serve Markdown instead of HTML to their crawlers. The logic seemed sound: Markdown is cleaner, more structured, and easier for LLMs to parse. Several high-profile sites reported gains after making the switch.

But most of these stories have been anecdotal.

So we decided to run a proper controlled test.

TL;DR: We A/B tested 381 pages across six sites. Pages served as Markdown got about one extra bot visit at the median over three weeks. Not nothing, but not the game-changer some have hypothesized.

What we tested

We partnered with six real websites across different niches and randomly split 381 pages into two groups:

- Control group (189 pages): AI bots saw the same HTML that human visitors see

- Treatment group (192 pages): AI bots saw clean Markdown versions of the same content
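The split itself can be sketched in a few lines of Python. This is a minimal illustration, not the tooling we used: the `assign_arms` function, the `(site, url)` input format, and the seed are hypothetical. Shuffling within each site (stratified randomization) keeps both arms balanced across the six sites.

```python
import random
from collections import defaultdict


def assign_arms(pages, seed=2026):
    """Randomly split pages into 'control' and 'treatment' within each site.

    pages: iterable of (site, url) tuples (hypothetical input format).
    Returns a dict mapping url -> arm name.
    """
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    by_site = defaultdict(list)
    for site, url in pages:
        by_site[site].append(url)

    assignment = {}
    for site in sorted(by_site):
        urls = sorted(by_site[site])  # sort first so the shuffle is deterministic
        rng.shuffle(urls)
        half = len(urls) // 2
        for url in urls[:half]:
            assignment[url] = "control"
        for url in urls[half:]:
            assignment[url] = "treatment"  # odd counts: extra page goes here
    return assignment
```

When a site has an odd number of pages, this sketch gives the extra page to the treatment arm; that tie-break is arbitrary.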

Human visitors always saw HTML, so their experience was unaffected. We used software to detect bot traffic and serve the appropriate format on the fly.
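Here is a minimal sketch of that per-request decision, assuming bots are identified by user-agent substrings and that both the HTML and Markdown versions of each page are already rendered. The token list and the `is_ai_bot` and `choose_response` helpers are illustrative assumptions, not the detection software we used; check each vendor's published crawler documentation for current user-agent strings.

```python
# Illustrative user-agent tokens (an assumption, not the list used in the study).
AI_BOT_TOKENS = (
    "ChatGPT-User", "GPTBot", "OAI-SearchBot",       # OpenAI
    "ClaudeBot", "Claude-User", "Claude-SearchBot",  # Anthropic
    "PerplexityBot", "Perplexity-User",              # Perplexity
    "meta-externalagent",                            # Meta
    "DuckAssistBot",                                 # DuckDuckGo
)


def is_ai_bot(user_agent: str) -> bool:
    """Case-insensitive substring match against the known bot tokens."""
    ua = user_agent.lower()
    return any(token.lower() in ua for token in AI_BOT_TOKENS)


def choose_response(user_agent, url, assignment, html, markdown):
    """Return (content_type, body) for one request.

    Only AI bots requesting treatment pages get Markdown; humans and
    control pages always get the regular HTML.
    """
    if is_ai_bot(user_agent) and assignment.get(url) == "treatment":
        return "text/markdown; charset=utf-8", markdown
    return "text/html; charset=utf-8", html
```

User-agent strings are easy to spoof, so a production setup would typically also verify requests against the IP ranges vendors publish. `text/markdown` is the registered media type for Markdown (RFC 7763).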

Then we measured bot visit volume over three weeks (January 19 – February 8, 2026) using Profound’s Agent Analytics. We tracked crawlers and user-triggered agents from OpenAI, Anthropic, Perplexity, Meta, and DuckDuckGo (DuckAssistBot), the bots that power today's AI answer engines.

The result: no clear winner

If Markdown were a game-changer, we would have seen it at this scale. We didn't.

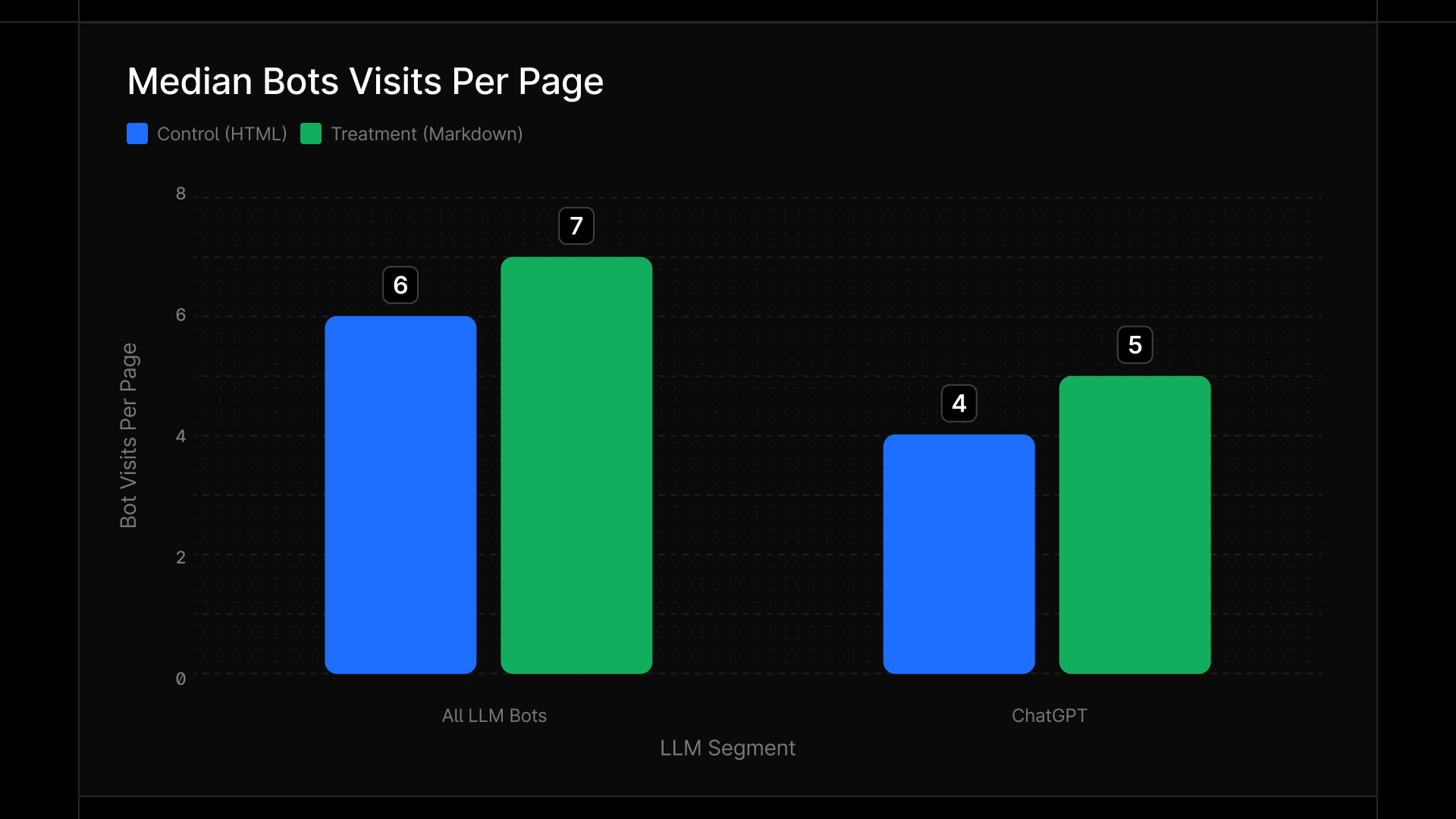

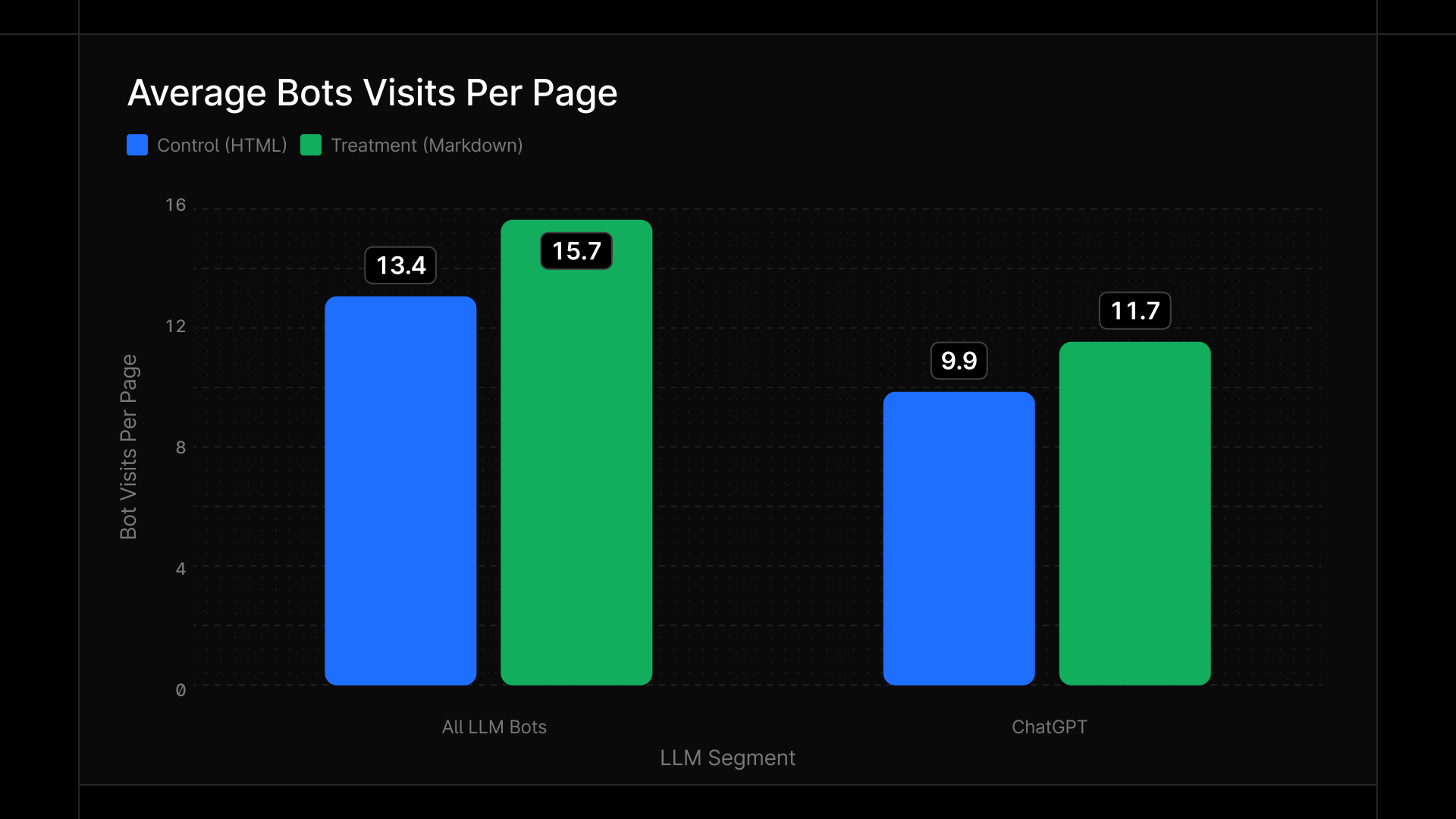

At first glance, the Markdown group looks slightly ahead: one more visit per page at the median and about 16% more visits on average. But here's the catch: the median page (your typical page) got only one extra visit. The 16% difference in means is driven almost entirely by high-traffic pages in the tail of the distribution: pages that were already getting lots of bot attention saw modest gains, while the median page saw almost nothing.

Figure 1: Median and mean bot visits per page by treatment arm. The Markdown group shows a slight directional advantage, but statistical tests indicate this difference is consistent with random noise.

Put simply: we can't say with confidence that Markdown actually drove more traffic.
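To see how a heavy tail can move the mean while the median barely budges, here is a toy calculation. The visit counts are invented for illustration and are not our experiment's data; `summarize` and `tail_share` are hypothetical helpers.

```python
from statistics import mean, median

# Toy per-page bot visit counts, invented for illustration (NOT study data).
control = [2, 3, 3, 4, 5, 6, 8, 40, 120]
treatment = [2, 3, 4, 5, 6, 7, 9, 48, 145]


def summarize(control, treatment):
    """Median difference in visits and relative lift in mean visits."""
    return {
        "median_diff": median(treatment) - median(control),
        "mean_lift": mean(treatment) / mean(control) - 1,
    }


def tail_share(control, treatment, k=2):
    """Fraction of the total visit difference contributed by the top-k pages of each arm."""
    top_t = sum(sorted(treatment, reverse=True)[:k])
    top_c = sum(sorted(control, reverse=True)[:k])
    return (top_t - top_c) / (sum(treatment) - sum(control))


print(summarize(control, treatment))
print(tail_share(control, treatment))
```

In this toy example the medians differ by one visit and the mean lift is roughly 20%, yet 33 of the 38 extra treatment visits come from the two busiest pages in each arm.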

The ChatGPT signal

There was one exception worth noting: ChatGPT-User, the bot triggered when someone uses ChatGPT's web search feature.

ChatGPT-User made up about 74% of all bot traffic in our experiment, and it was the only bot that showed a consistent directional advantage for Markdown (~+20%).
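Per-bot figures like these come from tagging each logged visit with a bot family and an arm. The sketch below assumes a hypothetical log format of `(bot_family, arm)` tuples and hypothetical helper names; it is not how Agent Analytics computes its numbers. Lift is normalized by pages per arm because the arms are not exactly equal in size (189 vs. 192 pages).

```python
from collections import Counter


def family_shares(visits):
    """Share of all visits by bot family.

    visits: list of (bot_family, arm) tuples, one per logged visit.
    """
    counts = Counter(family for family, _ in visits)
    total = sum(counts.values())
    return {family: n / total for family, n in counts.items()}


def family_lift(visits, pages_per_arm):
    """Per-family lift in visits per page, treatment vs. control.

    pages_per_arm: e.g. {"control": 189, "treatment": 192}.
    """
    by_key = Counter(visits)
    lifts = {}
    for family in {f for f, _ in visits}:
        c = by_key[(family, "control")] / pages_per_arm["control"]
        t = by_key[(family, "treatment")] / pages_per_arm["treatment"]
        lifts[family] = t / c - 1 if c else float("nan")  # undefined with no control visits
    return lifts
```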

But even here, the signal wasn't statistically significant. Looking at the distribution, the effect was concentrated in pages that already had high bot traffic: the Markdown group pulled ahead mostly at the 60th percentile and above. Below the median, the two groups were nearly identical.

Figure 2: Traffic composition by bot family. ChatGPT-User dominates, with Meta/Facebook a distant second.
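A simple way to see where in the distribution an effect shows up is to compare deciles of per-page visits between arms, as in the ChatGPT-User comparison above. This descriptive sketch uses Python's `statistics.quantiles`; `compare_deciles` is a hypothetical helper, and it is not the statistical test behind our conclusions.

```python
from statistics import quantiles


def compare_deciles(control, treatment):
    """Return (percentile, control_value, treatment_value) for the 10th..90th percentiles."""
    qc = quantiles(control, n=10, method="inclusive")
    qt = quantiles(treatment, n=10, method="inclusive")
    return [(10 * (i + 1), c, t) for i, (c, t) in enumerate(zip(qc, qt))]
```

If the arms match below the median and diverge only from the 60th percentile up, that is the pattern described for ChatGPT-User. On its own, a comparison like this says nothing about statistical significance.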

Why we think this is the case

Our hypothesis: LLMs are already extremely good at parsing HTML. Google's John Mueller has stated: "LLMs have trained on - read & parsed - normal web pages since the beginning. It seems a given that they have no problems dealing with HTML." Bing's Fabrice Canel echoed the sentiment, noting that "AI makes us great at understanding web pages" and questioning the value of maintaining separate bot-only content that no "humans eyes" ever check and fix.

These models have been trained on billions of web pages, and the overwhelming majority of the internet is published in HTML. OpenAI, Anthropic, Perplexity, and others have spent years optimizing their crawlers and extraction pipelines to handle messy, nested, JavaScript-heavy HTML at scale.

Markdown might be cleaner in theory, but in practice, it's not clear that cleanliness translates to a crawl advantage, at least not yet.

The internet is shifting toward agentic-first experiences, but we're not there yet. Today, most businesses still publish HTML. LLM providers are racing to ingest as much of the internet as possible, and what they're ingesting is overwhelmingly HTML. Their systems are built for it.

What this means for you

Create useful content

Instead of chasing technical tricks, focus on what's always worked: creating genuinely useful content that serves your audience.

Expectation: Not a silver bullet

Even in the most optimistic reading of our data, any effect is likely small. We can confidently rule out "slam dunk" gains: if Markdown were driving 40%+ increases in bot traffic, we would have detected it. The data suggests the real effect, if it exists at all, is much more modest.

We'll revisit this

LLM crawler behavior is evolving fast. What's true today may not hold in six months. As bots get smarter, more sites adopt Markdown, and model providers refine their content preferences, the calculus could shift. We plan to re-run this experiment later in 2026 and will update our guidance if anything changes.

A note on methodology

This was a randomized controlled experiment, the gold standard for causal inference. We didn't just compare two different sites or time periods; we randomly assigned pages within the same sites so that any systematic difference between the groups could be attributed to the treatment rather than to outside factors.

Figure 3: Bot traffic is inherently volatile. To put the experiment results in context, the table below shows how much daily lift fluctuated across three time windows. Even before the experiment launched, during the implementation period (when bot-detection tooling was being deployed but Markdown was not yet served), average lift was already +12%. This suggests some of the directional advantage observed during the experiment may reflect pre-existing noise rather than a true Markdown effect.
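One plausible definition of daily lift, assumed here rather than taken from our analysis, is the treatment arm's visits per page divided by the control arm's visits per page, minus one, averaged over the days in a window. The per-day record schema and the `daily_lift` and `window_lift` helpers are hypothetical.

```python
from datetime import date
from statistics import mean


def daily_lift(day):
    """Lift for one day: treatment visits/page over control visits/page, minus one."""
    t = day["treatment_visits"] / day["treatment_pages"]
    c = day["control_visits"] / day["control_pages"]
    return t / c - 1


def window_lift(days, start: date, end: date):
    """Average daily lift over days whose 'date' falls in [start, end]."""
    return mean(daily_lift(d) for d in days if start <= d["date"] <= end)
```

A nonzero lift in a window where both arms received identical HTML, like the +12% during implementation, serves as a baseline for judging how much of the experiment-window lift could be noise.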

That said, we were limited by sample size. To reliably detect a modest effect (e.g., ~10%), we would need more pages in each treatment arm.

But here's the flip side: if Markdown were a game-changer, we would have caught it. Our experiment was powered to detect effects of 40% or more, and an effect that large would have shown up clearly. It didn't.
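A back-of-the-envelope power calculation shows why roughly 190 pages per arm can detect a 40% lift but not a 10% one. This sketch assumes a two-sided z-test on log-transformed visit counts and an illustrative standard deviation of 1.0 on the log scale. Both are assumptions, not parameters from our analysis, and real bot traffic is heavier-tailed than this simple model.

```python
from math import ceil, log, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power(effect, n_per_arm, sigma=1.0, alpha=0.05):
    """Approximate power of a two-sided z-test on log visits.

    effect: relative lift, e.g. 0.40 for +40%.
    sigma: assumed SD of log visits per page (an assumption, not measured).
    """
    delta = log(1 + effect)           # lift expressed on the log scale
    se = sigma * sqrt(2 / n_per_arm)  # SE of the difference in arm means
    z_crit = Z.inv_cdf(1 - alpha / 2)
    return Z.cdf(delta / se - z_crit) + Z.cdf(-delta / se - z_crit)


def pages_needed(effect, sigma=1.0, alpha=0.05, target_power=0.80):
    """Pages per arm needed to reach target_power for a given relative lift."""
    delta = log(1 + effect)
    z_sum = Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(target_power)
    return ceil(2 * (sigma * z_sum / delta) ** 2)


print(power(0.40, 190), power(0.10, 190))
print(pages_needed(0.40), pages_needed(0.10))
```

Under these assumptions, 190 pages per arm give about 90% power for a 40% lift but only around 15% for a 10% lift, which would need roughly 1,700 pages per arm. The exact numbers move with `sigma`, but halving the effect size still roughly quadruples the pages needed.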

We will release a full paper with the complete statistical details.

Bottom line

We tested the claim that serving Markdown to AI crawlers drives more bot traffic. The data doesn't support it, at least not at a scale that would justify treating it as a priority.

If you're optimizing for AI visibility, focus on the fundamentals: high-quality, crawlable content; clear structure; fast load times; and making sure bots can actually access your pages. The format you serve them in? Probably not the leverage point you're looking for.

At least not yet.

Get started with Agent Analytics in your Profound dashboard or reach out to our sales team.