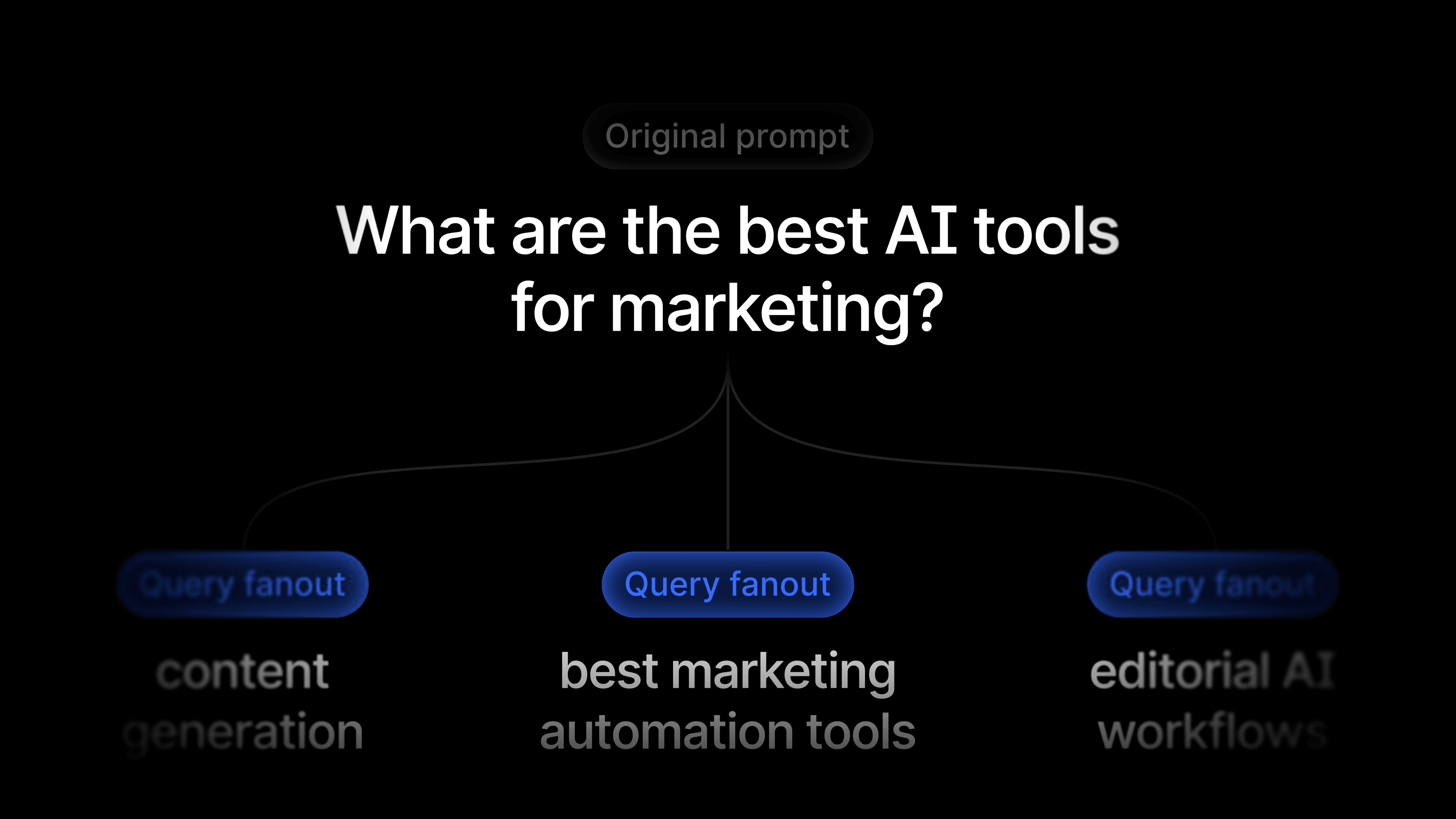

When someone asks ChatGPT, Claude, or Gemini a question, the model often doesn’t answer from memory alone. Before responding, these Answer Engines fan out the user’s prompt into multiple high-intent search queries, retrieve information from the web, and then synthesize the results into a final answer.

For example, if a user asks:

“Which business bank account is best for startups?”

The model might break this into queries like:

- “best business checking accounts for startups 2025”

- “online business banking comparison fees”

- “startup bank account requirements”

This hidden step, the Query Fanout, determines which brands get pulled into AI answers, and which get left out.

Today, we’re making that process fully transparent.

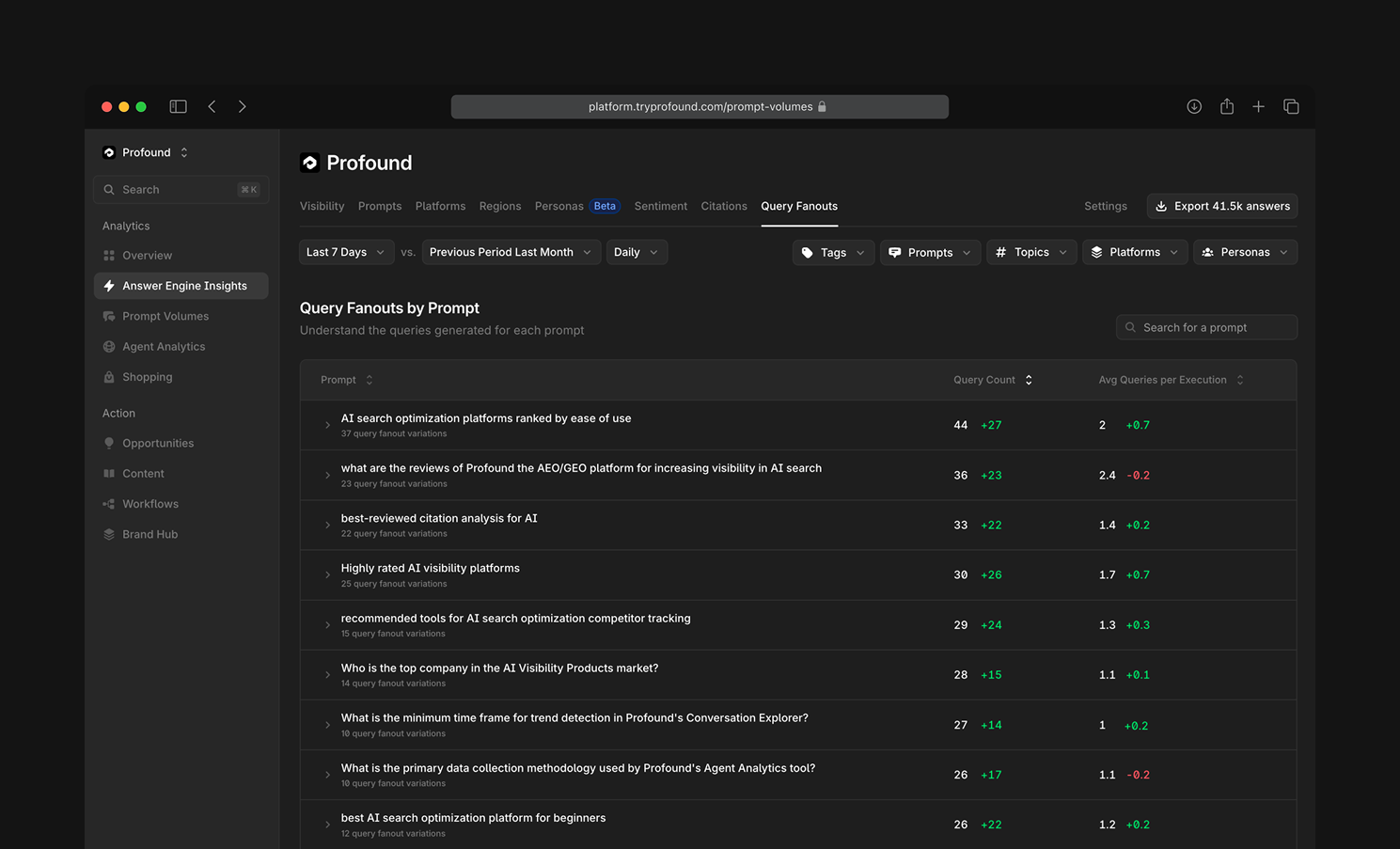

You can now explore every query fanout for the prompts you track in Profound on the new Query Fanouts page. It’s designed to help Answer Engine Optimization (AEO) teams understand how Answer Engines interpret prompts and to uncover the exact queries those engines use to research your category.

Accessing the Query Fanouts page

Query Fanouts now lives inside:

Answer Engine Insights → Query Fanouts

The page displays every tracked prompt that has produced a fanout, along with:

- Total Query Count

- Average Queries Per Execution

- Period-over-period changes (green/red indicators)

This gives you an instant snapshot of how often Answer Engines are expanding your prompts, and how that behavior is shifting over time.
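
To make these metrics concrete, here is a minimal Python sketch of how they could be computed from raw fanout data. The `Execution` record and its fields are hypothetical stand-ins for whatever Profound stores internally; the sketch only illustrates the arithmetic behind total queries, queries per execution, and the relative change between two periods.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Execution:
    """One run of a tracked prompt on an Answer Engine (hypothetical record shape)."""
    engine: str
    queries: list[str] = field(default_factory=list)  # fanout queries issued in this run


def total_query_count(executions: list[Execution]) -> int:
    # Every query issued across all runs in the period, duplicates included.
    return sum(len(e.queries) for e in executions)


def avg_queries_per_execution(executions: list[Execution]) -> float:
    # Mean fanout size per run; 0.0 when the period has no runs.
    if not executions:
        return 0.0
    return total_query_count(executions) / len(executions)


def period_change(current: float, previous: float) -> float | None:
    # Relative change vs. the previous period (0.25 means +25%).
    # Assumption: a positive value maps to a green indicator, a negative one to red.
    # Returns None when there is no baseline to compare against.
    if previous == 0:
        return None
    return (current - previous) / previous


# Placeholder query strings; real fanouts would be full search queries.
previous = [Execution("chatgpt", ["a", "b"]), Execution("gemini", ["c"])]
current = [Execution("chatgpt", ["a", "b", "d"]), Execution("gemini", ["c", "e", "f"])]

print(total_query_count(current))
print(period_change(avg_queries_per_execution(current), avg_queries_per_execution(previous)))
```

Here the average fanout doubles from 1.5 to 3.0 queries per execution, which the sketch reports as a relative change of 1.0 (+100%).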

Expanding a prompt reveals a preview of the top query variations used in its fanout, sorted by how often each query appears. This shows how Answer Engines reword, refine, and reinterpret the original user prompt, including:

- How the model reframes the intent

- Which modifiers are added (e.g., “best,” “review,” “2025”)

- Which variants show up most often

- How many distinct queries your prompt produces

This quick view alone helps identify content gaps or mismatches between what users ask and what Answer Engines actually search for.
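
The ranking in this preview boils down to counting. A minimal sketch, assuming each execution’s fanout is available as a list of query strings (a hypothetical input shape) and that queries differing only in case or spacing should be merged:

```python
from collections import Counter


def normalize(query: str) -> str:
    # Merge trivial variants: lowercase and collapse runs of whitespace.
    return " ".join(query.lower().split())


def top_variations(fanouts: list[list[str]], k: int = 5) -> list[tuple[str, int]]:
    # Count each normalized query across all executions; most frequent first.
    # Ties keep the order in which queries were first seen.
    counts = Counter(normalize(q) for queries in fanouts for q in queries)
    return counts.most_common(k)


def distinct_query_count(fanouts: list[list[str]]) -> int:
    # How many unique queries the prompt produced, after normalization.
    return len({normalize(q) for queries in fanouts for q in queries})


fanouts = [
    ["best business checking accounts for startups 2025", "startup bank account requirements"],
    ["Best business checking accounts for startups 2025", "online business banking comparison fees"],
    ["best business checking accounts for  startups 2025"],
]
print(top_variations(fanouts, k=2))
print(distinct_query_count(fanouts))
```

The three spellings of the “best business checking accounts” query collapse into one variation seen three times, so the prompt produces three distinct queries in total.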

Analyzing query fanouts for a prompt

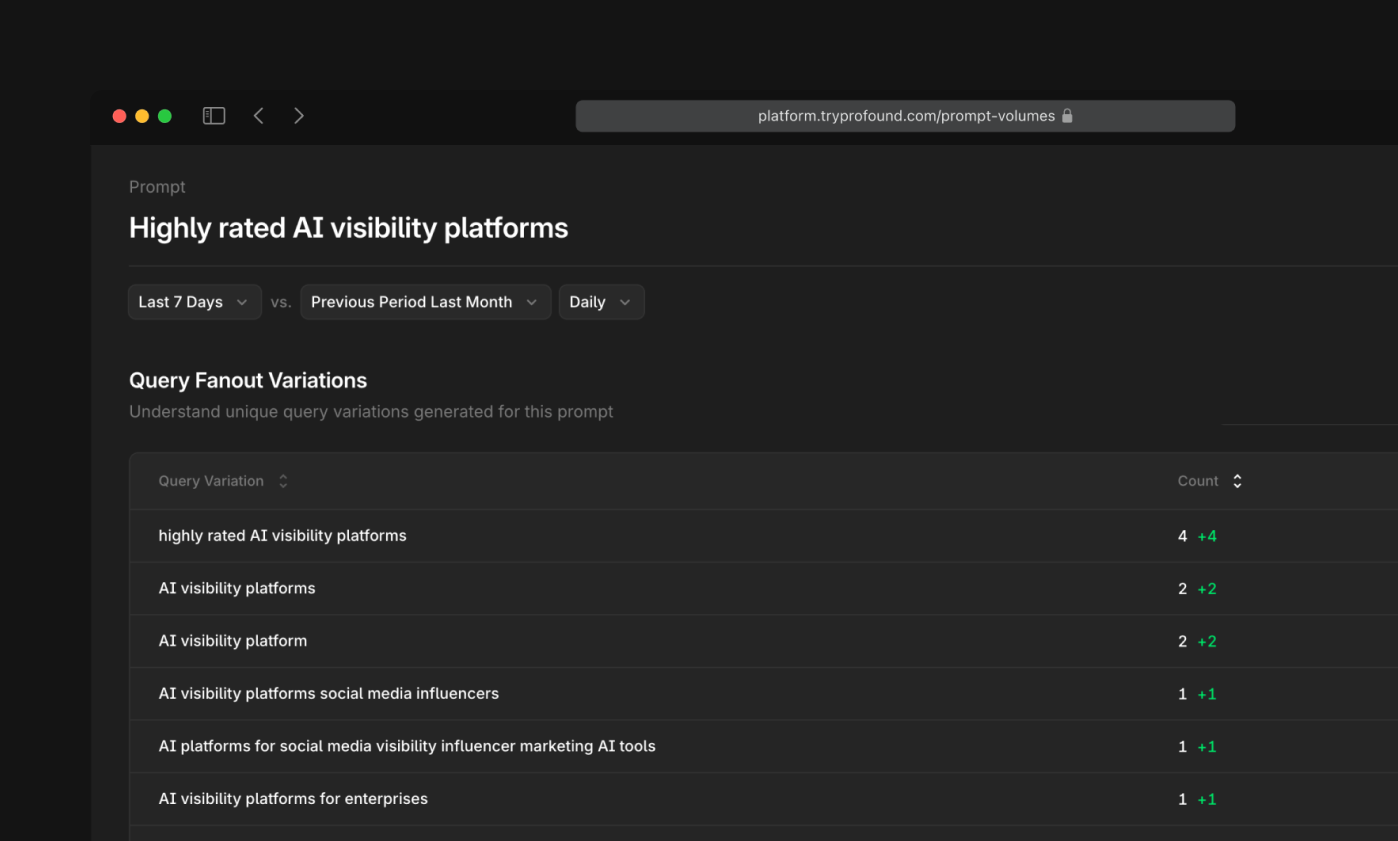

Selecting Analyze on any prompt, or choosing View all beneath its query variations, opens the Query Fanout Analysis view for that prompt.

This dedicated page provides richer insight into how the selected prompt is transformed into queries.

Query Variation Breakdown

See every unique query variation generated from your prompt, along with how frequently each one occurs and how its share is shifting over time.

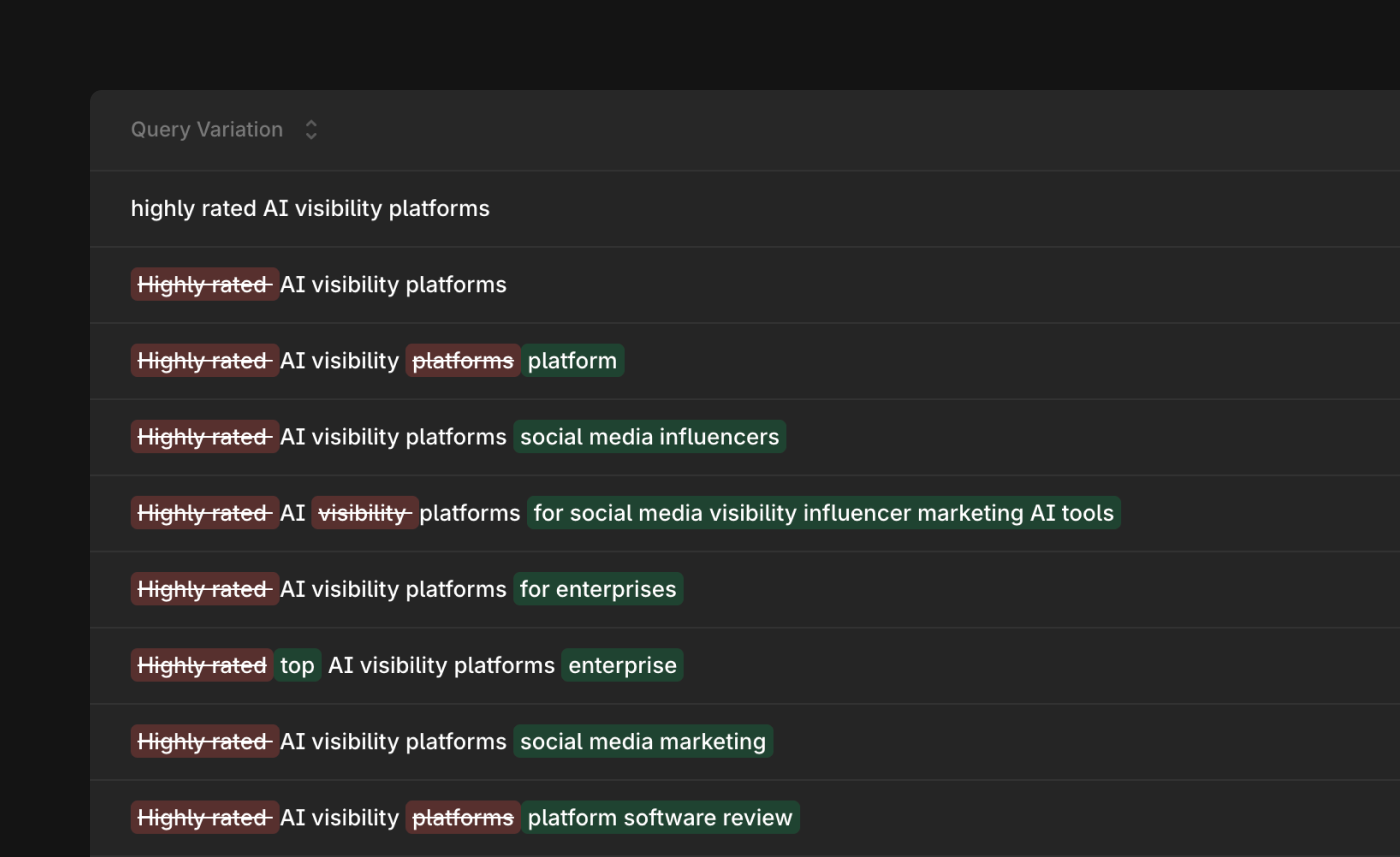

You can also view the diff between the prompt and each query to see exactly how the prompt was transformed, making it easy to spot influential variants and emerging trends in how Answer Engines interpret your prompt.
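
A prompt-to-query diff can be approximated at the word level with Python’s standard `difflib` module. This is a generic sketch, not Profound’s diffing logic; the tokenizer (lowercase, word characters only, punctuation dropped) is an assumption.

```python
import difflib
import re


def tokenize(text: str) -> list[str]:
    # Assumption: lowercase word tokens, punctuation dropped.
    return re.findall(r"\w+", text.lower())


def word_diff(prompt: str, query: str) -> list[tuple[str, str]]:
    # Returns (op, word) pairs: "=" kept, "-" dropped from the prompt, "+" added by the engine.
    a, b = tokenize(prompt), tokenize(query)
    diff: list[tuple[str, str]] = []
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            diff += [("=", w) for w in a[i1:i2]]
        else:
            # "replace", "delete" and "insert": old words out, new words in.
            diff += [("-", w) for w in a[i1:i2]]
            diff += [("+", w) for w in b[j1:j2]]
    return diff


prompt = "Which business bank account is best for startups?"
query = "startup bank account requirements"
print(" ".join(op + w for op, w in word_diff(prompt, query)))
```

Diffing word sequences rather than characters keeps the output readable: “bank account” survives intact, while the engine swaps the question framing for “startup” and “requirements.”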

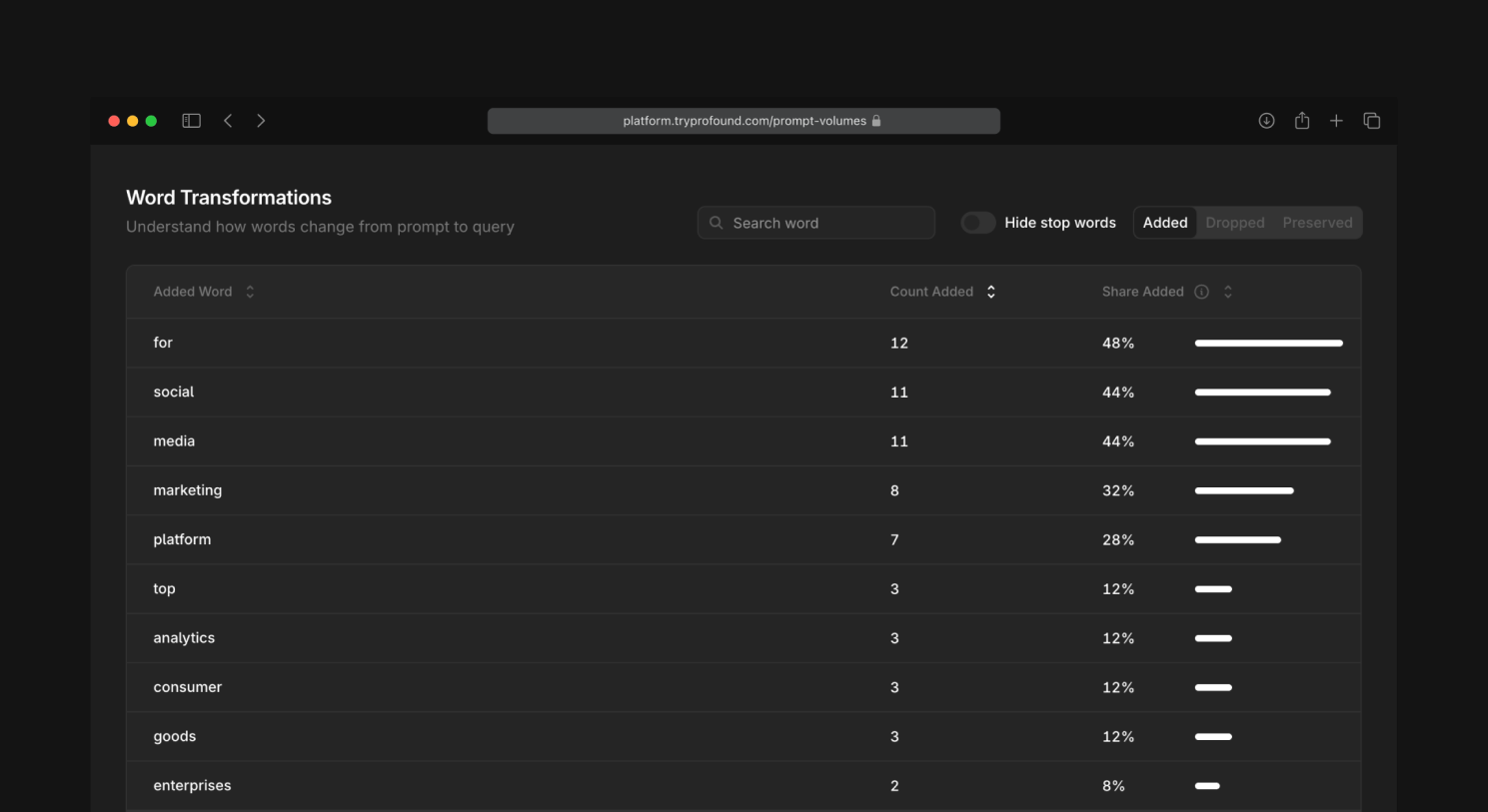

Word Transformations

This view highlights the exact words Answer Engines add, drop, or preserve when transforming a prompt into queries. It’s a fast way to identify important modifiers, competitor mentions, and category terms the model consistently introduces. You can also hide stop words (such as “the,” “was,” and “of”) to focus on the terms that actually matter.
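
The add/drop/preserve split is essentially set arithmetic between each query’s words and the prompt’s words. A minimal sketch, assuming simple lowercase word tokens and a deliberately tiny, hypothetical stop-word list; it does no stemming, so “startup” and “startups” count as different words.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list for illustration.
STOP_WORDS = {"a", "an", "and", "for", "in", "is", "of", "the", "to", "was", "which"}


def words(text: str, hide_stop_words: bool) -> set[str]:
    # Lowercase word tokens, optionally minus stop words.
    tokens = set(re.findall(r"\w+", text.lower()))
    return tokens - STOP_WORDS if hide_stop_words else tokens


def word_transformations(
    prompt: str, queries: list[str], hide_stop_words: bool = True
) -> tuple[Counter, Counter, Counter]:
    # For each word, count how many queries added, dropped, or preserved it.
    p = words(prompt, hide_stop_words)
    added, dropped, preserved = Counter(), Counter(), Counter()
    for q in queries:
        qw = words(q, hide_stop_words)
        added.update(qw - p)
        dropped.update(p - qw)
        preserved.update(p & qw)
    return added, dropped, preserved


added, dropped, preserved = word_transformations(
    "Which business bank account is best for startups?",
    [
        "best business checking accounts for startups 2025",
        "online business banking comparison fees",
        "startup bank account requirements",
    ],
)
print(sorted(added))
print(preserved.most_common(1))
```

Counting per query, rather than just taking set unions, is what lets a view like this rank the modifiers an engine introduces most consistently.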

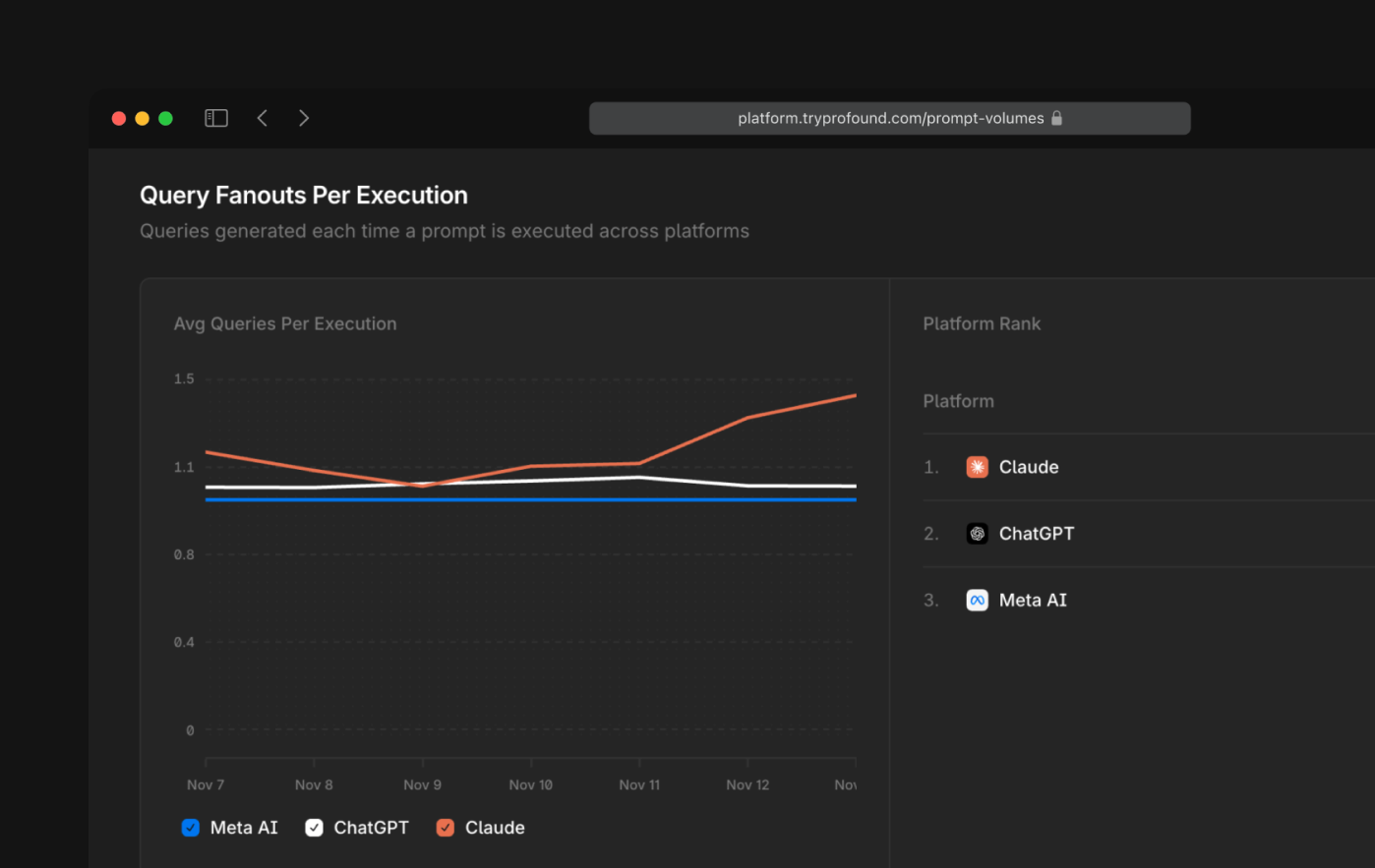

Fanouts Per Execution

Fanouts Per Execution shows how many queries each Answer Engine generates on average when answering your prompt. This lets you compare engines side by side and see which ones explore your prompt more broadly by issuing more queries.
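
Comparing engines this way is a group-by followed by an average. A minimal sketch, assuming a hypothetical list of `(engine, query_count)` pairs with one entry per execution; the engine names are illustrative labels.

```python
from collections import defaultdict


def fanouts_per_execution(runs: list[tuple[str, int]]) -> dict[str, float]:
    # Group query counts by engine, then average within each group.
    by_engine: dict[str, list[int]] = defaultdict(list)
    for engine, n_queries in runs:
        by_engine[engine].append(n_queries)
    return {engine: sum(counts) / len(counts) for engine, counts in by_engine.items()}


runs = [("chatgpt", 3), ("chatgpt", 5), ("gemini", 8), ("gemini", 10), ("claude", 2)]
per_engine = fanouts_per_execution(runs)

# Engines that issue more queries per run are exploring the prompt more broadly.
for engine, avg in sorted(per_engine.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{engine}: {avg:.1f} queries per execution")
```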

Why this matters

In 2025, creating content without considering query fanouts is like doing SEO without knowing your keywords.

Answer Engines don’t simply evaluate a user’s question; they transform it into a set of richer, more targeted search queries, and those queries are what they actually use to decide which sources to cite.

If your content doesn’t rank for the queries the model actually runs, you won’t appear in the answer the user sees. We’ve written previously about how this works in practice in our breakdown of ChatGPT’s query fanout behavior.

At Profound, we analyze this behavior at scale, running millions of prompts through Answer Engines every day and capturing both the answers and the underlying fanouts. This gives AEO teams a first-of-its-kind window into:

- How AI interprets user intent

- What queries drive citations

- Which modifiers matter most

- Where content gaps and opportunities exist

With Query Fanouts, you can stop guessing how Answer Engines reason about your prompts and start optimizing for how they actually search. Get started by visiting Answer Engine Insights in your Profound dashboard, or contact our sales team for a demo.